As the year draws to an end, it’s time to compile the exciting discoveries that marked 2010. Here is a snapshot of my top 5, in chronological order:

Study #5: Communicating with the minimally conscious

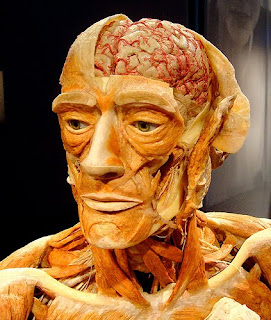

In a nutshell: An imaging technique, functional magnetic resonance imaging (fMRI), allowed researchers to communicate with patients in a vegetative state. Researchers asked yes/no questions, and the patients answered by thinking of playing tennis for yes or thinking of a house for no. The resulting brain signals from these thoughts could be imaged, interpreted and used for communication.

The good: This technique offers great hope to friends and relatives of patients in minimally conscious states.

The bad: The study was heavily criticized: the yes/no communication was demonstrated in only one patient, and the technique is far from perfect.

What’s next? We will no doubt hear more about this in the near future. The first step will be to replicate these findings with a greater number of patients.

Study #4: The synthetic cell

In a nutshell: Researchers made synthetic DNA and incorporated it into an empty bacterial cell, thereby creating a fully functioning cell with a man-made genome.

The good: This study represents a technical feat and opens new doors in the fields of molecular and cellular biology.

The bad: Like transgenic organisms before it, the synthetic cell reopens a Pandora’s box of ethical questions.

What’s next? Human-engineered cells will probably play a role in gene therapy and in the quest to build tissue in a dish.

Study #3: Brain training doesn’t work

In a nutshell: A study of computerized brain training with over 11,000 participants showed that people improved at the tasks they practiced, but this improvement didn’t extend to general cognition.

The good: This study urges caution when buying into the brain training craze.

The bad: The results may be misleading: just because the researchers didn’t see any improvement doesn’t mean there wasn’t any. The brain training could have been inadequate, or the researchers could have been measuring the wrong parameters.

What’s next? More controlled studies will be needed to determine the effectiveness of the games. Brain training is likely to become increasingly specialized: training for older adults, for children with autism, etc.

Study #2: Optogenetics

In a nutshell: Researchers were able to control brain cells using light, and relieved symptoms of Parkinson’s disease in mice.

The good: I said it before and I’ll say it again: optogenetics has the potential to revolutionize medicine.

The bad: The technique is complex and, so far, only possible in small mammals.

What’s next? Researchers are already testing in larger mammals and developing new ways to deliver light into the brain.

Study #1: The slut gene

In a nutshell: Researchers found a relationship between a specific version of a gene and promiscuous sexual behaviors.

The good: The study provides new insights into the link between genes and human behavior.

The bad: It’s not that simple.

What’s next? You can expect more studies of the “this gene does that” type. However, researchers are increasingly interested in how the environment can impact the expression of genes, and the story is bound to get even more complicated.

Looking forward to more gems in 2011!

Happy New Year!